Computing is poised for a profound shift, one that could overshadow the current enthusiasm for AI. New technologies are set to reshape how we process information, store data, and interact with machines.

Beyond AI: the next frontier in computing

While artificial intelligence has captured significant attention and funding in recent years, specialists caution that the next major transformation in computing could come from entirely different breakthroughs. Quantum computing, neuromorphic processors, and advanced photonics are among the technologies positioned to reshape information technology. These developments promise not only more processing power but also fundamentally new approaches to problems that conventional computers struggle to solve.

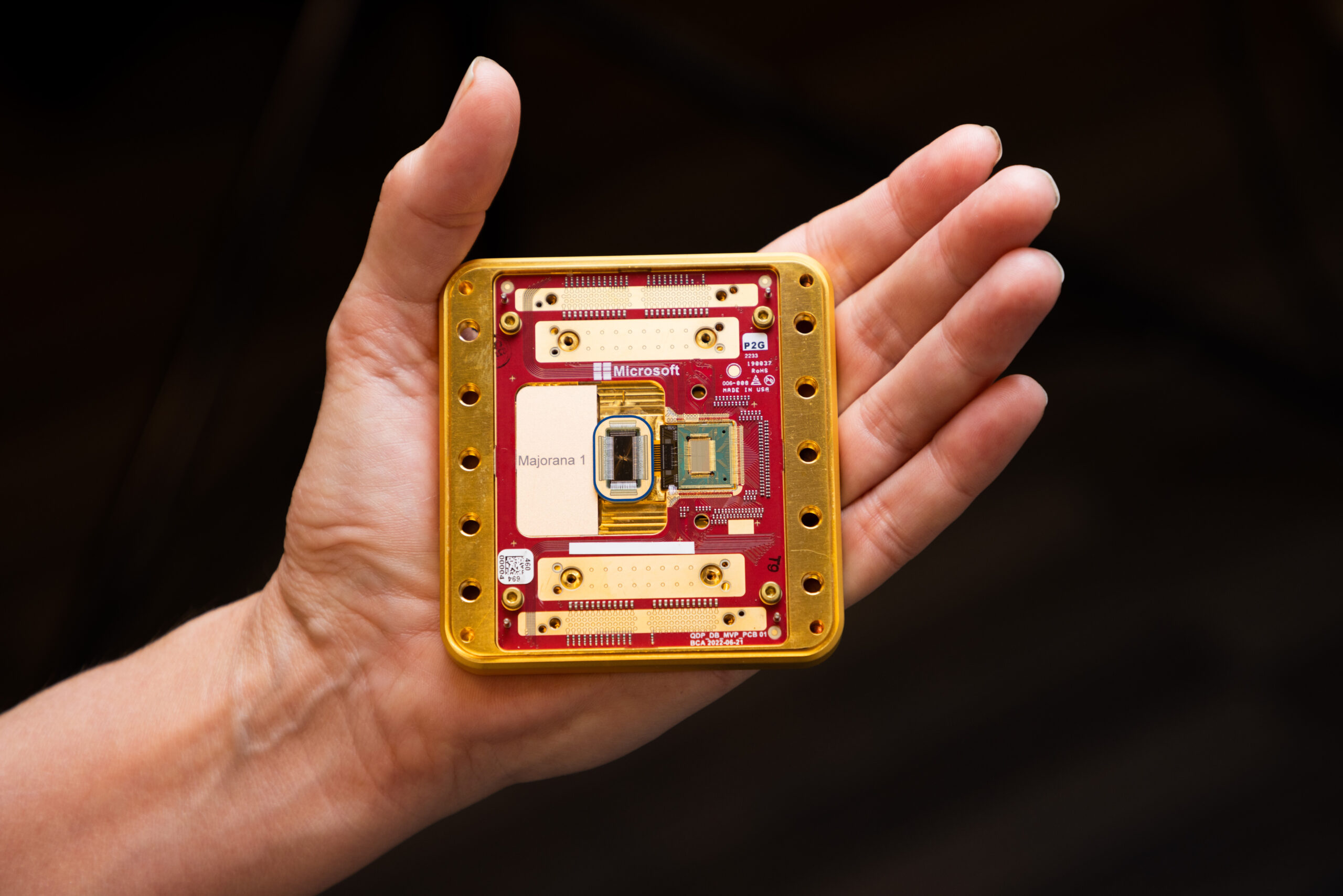

Quantum computing in particular has drawn worldwide interest for its potential to perform certain computations well beyond the reach of conventional computers. Where standard computers use bits that are either one or zero, quantum computers rely on qubits, which can exist in a superposition of both states at once. For suitable problems, this could let them explore very large solution spaces, optimize complex systems, and tackle challenges in cryptography, materials science, and pharmaceuticals far faster than classical machines. Practical, large-scale quantum devices are still under development, but current experiments are already exploring specialized uses such as molecular modeling and climate simulation.
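To make the bit-versus-qubit contrast concrete, here is a minimal sketch in plain Python. It simulates a single qubit classically as a pair of amplitudes; the function names (`hadamard`, `probabilities`, `amplitudes_needed`) are illustrative choices for this sketch, not part of any quantum library, and a real quantum device does not work by storing these numbers.

```python
import math

def hadamard(state):
    """Apply a Hadamard gate to a single-qubit state [amp0, amp1]."""
    a, b = state
    s = 1 / math.sqrt(2)
    return [s * (a + b), s * (a - b)]

def probabilities(state):
    """Probability of measuring 0 or 1: the squared magnitude of each amplitude."""
    return [abs(amp) ** 2 for amp in state]

def amplitudes_needed(n_qubits):
    """Number of amplitudes a classical simulation must track for n qubits."""
    return 2 ** n_qubits

zero = [1.0, 0.0]             # a qubit that is definitely 0, like a classical bit
plus = hadamard(zero)         # an equal superposition of 0 and 1
probs = probabilities(plus)   # both measurement outcomes are equally likely
```

The doubling in `amplitudes_needed` is one way to see why classical simulation of many qubits becomes impractical: each added qubit doubles the number of amplitudes that must be tracked.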

Neuromorphic computing represents another promising direction. Inspired by the human brain, neuromorphic chips are designed to emulate neural networks with high energy efficiency and remarkable parallel processing capabilities. These systems can handle tasks like pattern recognition, decision-making, and adaptive learning far more efficiently than conventional processors. By mimicking biological networks, neuromorphic technology has the potential to revolutionize fields ranging from robotics to autonomous vehicles, providing machines that can learn and adapt in ways closer to natural intelligence than existing AI systems.
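As a rough illustration of the brain-inspired style of computation, the sketch below simulates a single leaky integrate-and-fire neuron, a simple spiking-neuron model often used to explain neuromorphic designs. The article does not name a specific neuron model, so the choice of model, the function name `simulate_lif`, and the parameter values are all assumptions made for this example.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate a leaky integrate-and-fire neuron over discrete time steps.

    Returns a list with 1 where the neuron spikes and 0 elsewhere.
    All parameter values are illustrative assumptions.
    """
    potential = reset
    spikes = []
    for current in input_current:
        # The membrane potential decays (leaks) and then integrates new input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)      # the neuron fires
            potential = reset     # and its potential resets
        else:
            spikes.append(0)
    return spikes

# A constant weak input makes the neuron fire only occasionally.
spikes = simulate_lif([0.3] * 10)
```

The neuron produces output only when accumulated input crosses the threshold, which hints at where the energy savings of event-driven hardware could come from: most of the time, nothing needs to be computed or transmitted.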

The rise of photonics and alternative computing architectures

Photonics, the use of light to carry and process information, is gaining traction as an alternative to traditional silicon-based electronics. Optical computing moves data as light rather than as electrical current, which can reduce latency and energy consumption while greatly increasing bandwidth. This technology could prove essential for data centers, telecommunications, and scientific research, where the volume and velocity of information are growing rapidly. Companies and research institutions worldwide are exploring ways to integrate photonics with conventional circuits, aiming to create hybrid systems that combine the strengths of both.

Other novel approaches, such as spintronics and molecular computing, are also emerging. Spintronics uses the electron's spin, a quantum property, in addition to its charge to store and manipulate data, potentially offering memory and processing performance superior to existing hardware. Molecular computing, which uses molecules to perform logical operations, raises the possibility of shrinking components beyond the limits of silicon chips. These technologies are still mostly experimental, yet they underscore how much innovation is under way in the search for computing beyond AI.

Societal and industrial ramifications

The impact of these new computing paradigms will extend far beyond laboratory research. Businesses, governments, and scientific communities are preparing for a world where problems previously considered intractable can be addressed in hours or minutes. Supply chain optimization, climate modeling, drug discovery, financial simulations, and even national security operations stand to benefit from faster, smarter, and more adaptive computing infrastructure.

The pursuit of advanced computing power is a worldwide endeavor. The United States, China, and the member states of the European Union are investing heavily in research and development, recognizing the strategic importance of technological leadership. Private companies, from established technology giants to agile startups, are likewise pushing the boundaries, often in partnership with academic institutions. The competition is fierce, but it is also driving rapid advances that could reshape entire sectors over the next ten years.

As computing evolves, it may also change how we conceptualize human-machine interaction. Advanced architectures could enable devices that understand context more intuitively, perform complex reasoning in real time, and support collaborative problem-solving across multiple domains. Whereas current AI relies heavily on pre-trained models and vast datasets running on conventional hardware, these new technologies may offer more dynamic, adaptive, and efficient solutions to a range of challenges.

Preparing for a post-AI computing landscape

For businesses and governments alike, the arrival of these technologies brings both opportunities and challenges. Businesses will need to re-evaluate their IT infrastructure, invest in staff training, and seek collaborations with research institutions to take advantage of new breakthroughs. Governments, meanwhile, must develop regulatory frameworks that ensure ethical deployment, robust cybersecurity, and fair access to these technologies.

Education will also be a crucial factor. Preparing the next generation of scientists, engineers, and analysts to work with quantum systems, neuromorphic processors, and photonics-based platforms will require substantial changes to curricula and training. Interdisciplinary expertise that combines physics, computer science, materials science, and applied mathematics will be essential for anyone entering the field.

Meanwhile, ethical considerations remain paramount. New computing technologies could widen existing inequalities if access to them is limited to particular regions or organizations. Policymakers and technology leaders must balance the pursuit of progress with the need to ensure that the benefits of advanced computing are shared equitably across society.

The future of AI and its implementations

Although artificial intelligence continues to capture global attention, it is only part of a larger wave of technological advancement. The next era of computing may redefine what machines can do, from solving intractable scientific problems to creating adaptive, brain-inspired systems capable of learning and evolving on their own. Quantum, neuromorphic, and photonic technologies represent the frontier of this shift, offering speed, efficiency, and capabilities that transcend today’s digital landscape.

As the boundaries of what is achievable expand, scientists, businesses, and governments are preparing to operate in a world where computational power is far less of a constraint. The next ten years could bring a major technological transformation, changing how people interact with data, devices, and their surroundings. In that period, computation itself could become a transformative force, reaching far beyond the influence of artificial intelligence.